Liveness detection for face recognition

Liveness application for mobile and web user registration and authentication. Over 200,000 checks per day worldwide.

As easy as taking a selfie.

General architecture:

Liveness Mobile/Web SDK: for action control and video recording.

Liveness Server API: to capture the best shot and protect against biometry attacks.

What is the function of the mobile and WEB SDK?

We have developed client-side mobile and Web SDKs for embedding into your website and mobile application. We recommend using our SDKs to control shooting conditions and face position and to protect against "deepfake" and "video stream spoofing" attacks.

Control of conditions

In some cases, external conditions do not allow taking a clear photo or video of the face for the Liveness check and the subsequent biometric identification and verification.

Users may pass the check in many different situations: outdoors, in the dark, on the move, in bright light. Our SDK automatically detects shooting conditions (darkening, blurring, flashing) and either illuminates the face when there is not enough light or recommends choosing more favorable conditions for shooting.
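As an illustration of what such condition checks might look like, here is a minimal sketch in Python. It is not the SDK's actual algorithm: the thresholds, function names, and the frame format (a grayscale image given as rows of 0-255 integers) are all assumptions made for this example.

```python
from statistics import mean

# Hypothetical thresholds, chosen for illustration only.
DARK_MEAN = 50        # mean brightness below this counts as "too dark"
BRIGHT_MEAN = 205     # mean brightness above this counts as "too bright"
BLUR_GRADIENT = 4.0   # mean neighbour difference below this counts as "blurred"


def mean_brightness(frame):
    """Average pixel value of a grayscale frame (rows of 0-255 integers)."""
    return mean(pixel for row in frame for pixel in row)


def sharpness(frame):
    """Mean absolute difference between horizontally adjacent pixels.

    Assumes every row has at least two pixels.
    """
    diffs = [abs(row[i + 1] - row[i]) for row in frame for i in range(len(row) - 1)]
    return mean(diffs)


def assess_conditions(frame):
    """Return a list of hints; an empty list means the frame looks usable."""
    hints = []
    brightness = mean_brightness(frame)
    if brightness < DARK_MEAN:
        hints.append("too_dark")
    elif brightness > BRIGHT_MEAN:
        hints.append("too_bright")
    if sharpness(frame) < BLUR_GRADIENT:
        hints.append("blurred")
    return hints


# A uniformly dark frame is flagged as both dark and lacking detail.
dark_frame = [[10] * 8 for _ in range(8)]
# A high-contrast checkerboard has mid-range brightness and strong edges.
checker_frame = [[0 if (x + y) % 2 else 255 for x in range(8)] for y in range(8)]
```

In a client application, a hint such as `too_dark` could trigger screen illumination, while `blurred` could prompt the user to hold the device still.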

Control of the position of the face relative to the camera

Liveness verification aims to protect against spoofing attacks and select the best shot for further biometric identification. Face recognition algorithms show the best results on images corresponding to the VISA or VISABORDER format.

See what NIST dataset formats are

The more freely a face is photographed and the more the angle varies, the more likely face recognition algorithms are to fail. If the face is cut off, rotated, tilted, or too far from the camera, it may not be detected at all, or the recognition error may increase significantly.
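The position problems listed above (cut off, rotated, tilted, too distant) can be sketched as simple geometric checks. The following Python example is only an illustration: the `FaceObservation` fields, the `position_issues` function, and the threshold values are hypothetical and do not describe the SDK's real interface.

```python
from dataclasses import dataclass


@dataclass
class FaceObservation:
    """Hypothetical output of a face detector for one frame."""
    x: float          # left edge of the face box, in pixels
    y: float          # top edge of the face box, in pixels
    width: float      # face box width, in pixels
    height: float     # face box height, in pixels
    yaw_deg: float    # head turned left or right
    roll_deg: float   # head tilted toward a shoulder


def position_issues(face, frame_w, frame_h, min_face_ratio=0.3, max_angle_deg=10.0):
    """Return a list of position problems; an empty list means the pose is acceptable."""
    issues = []
    # Cut off: any edge of the face box lies outside the frame.
    if (face.x < 0 or face.y < 0
            or face.x + face.width > frame_w
            or face.y + face.height > frame_h):
        issues.append("cut_off")
    # Too far: the face occupies too small a share of the frame height.
    if face.height / frame_h < min_face_ratio:
        issues.append("too_far")
    # Rotated or tilted beyond the assumed tolerance.
    if abs(face.yaw_deg) > max_angle_deg:
        issues.append("rotated")
    if abs(face.roll_deg) > max_angle_deg:
        issues.append("tilted")
    return issues


centered = FaceObservation(x=200, y=150, width=240, height=320, yaw_deg=2, roll_deg=-3)
off_frame = FaceObservation(x=-10, y=100, width=100, height=100, yaw_deg=25, roll_deg=0)
```

A frame with no issues would be a candidate for the best shot; otherwise the returned labels could drive on-screen guidance such as "move closer" or "look straight at the camera".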

Using our SDK, you can always be sure that the entire biometric identification or authentication process will proceed as quickly as possible.

Protection against "video stream spoofing" attacks

The security of the biometric identification/authentication process is threatened not only by spoofing attacks but also by specific attacks that use software tools to create a virtual camera and “replace” the original video.

Such attacks exploit vulnerabilities in filming and sending media content, attacking the recording device itself.

We have implemented footage originality verification tools in our SDKs to verify that the footage is genuine and was recorded by the original camera.

Our algorithms are based on deep machine learning. They check frames from the video and track dozens of parameters (presence of glare and reflections, micromotions, pulse, etc.).

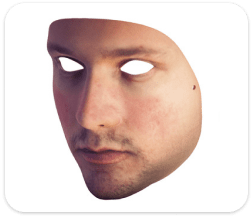

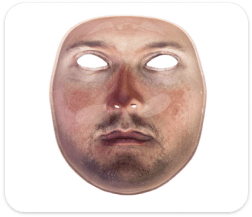

We have trained our system on tens of thousands of attacks. We also work with manufacturers of 3D masks and are constantly searching for new samples.

This approach allowed our Oz Liveness solution to pass ISO 30107-3 Standard certification Level 1 and 2 at the NIST-accredited iBeta laboratory with 0% FAR.

Do you want to compete with our technology? Try our challenge now and find the fake!

What is the iBeta test and ISO 30107 certification?

iBeta is nationally accredited as a test lab by the National Voluntary Laboratory Accreditation Program (NVLAP Testing Lab Code 200962) to the ISO/IEC 17025:2017 requirements for the competence of testing and calibration laboratories.

In 2011, iBeta was accredited by NIST and became a biometrics expert in the National Voluntary Laboratory Accreditation Program (NVLAP) for Biometric Testing under NIST Handbook 150-25.

In addition, iBeta's procedures against the ISO 30107-3 Presentation Attack Detection (PAD) standard were audited by its accrediting body, and iBeta’s Scope of Accreditation was extended in April 2018 to include conformance testing to the ISO 30107-3 standard.

As the subjects were cooperative, each artifact species appeared as a natural duplication of the face (meeting the requirements of Properties 1 and 2). All of the facial features captured in the artifacts contained extractable elements, as they were acquired from the genuine subject (meeting the requirement of Property 3). In some cases, hats and glasses were added to the artifact during the presentation attack.

How is testing done?

The iBeta laboratory prepares artifacts for attacks. Artifacts for the testing consisted of six species:

- a. 2D photo on matte paper with the edges cut
- b. 2D photo on matte paper presented on a curved surface
- c. 2D mask with the eyes cut out
- d. Photo displayed on a laptop or iPad
- e. 3D handmade paper mask
- f. Video shown on a laptop or iPad

Testing:

A sequence of Liveness checks is made using attack artifacts: one original check and three attacks. This sequence is repeated 50 times for each attack.

Results:

On iPhone 6s

Of the 300 original Liveness tests that were performed with a real face, 299 were successful.

The error rate of Oz Liveness on checks of a real face was less than 1%. At the same time, not a single attack was missed, i.e., the accuracy of detecting attacks is 100%.

On Android

300 out of 300 checks of a live person were completed successfully. All attacks were detected with 100% accuracy.

Thus, the error rate of Oz Liveness on checks of a real face is 0%, and the attack detection accuracy is 100%.
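The percentages above follow from the test protocol by simple arithmetic, sketched below in Python. The total of 900 attack presentations is not stated in the text; it is derived here from the description (six species, 50 repetitions, three attacks per sequence), so treat it as an assumption. The names are illustrative.

```python
SPECIES = 6                 # artifact species a to f
REPEATS_PER_SPECIES = 50    # each sequence is repeated 50 times per species
GENUINE_PER_SEQUENCE = 1    # one original check in each sequence
ATTACKS_PER_SEQUENCE = 3    # three attacks in each sequence

# Totals implied by the protocol description.
genuine_total = SPECIES * REPEATS_PER_SPECIES * GENUINE_PER_SEQUENCE
attack_total = SPECIES * REPEATS_PER_SPECIES * ATTACKS_PER_SEQUENCE


def error_rate_percent(errors, total):
    """Share of failed checks, as a percentage."""
    return 100.0 * errors / total


# iPhone 6s: 299 of 300 genuine checks succeeded, so 1 genuine check failed.
iphone_genuine_error = error_rate_percent(genuine_total - 299, genuine_total)
# Android: 300 of 300 genuine checks succeeded.
android_genuine_error = error_rate_percent(genuine_total - 300, genuine_total)
# No attack was accepted on either platform.
missed_attack_rate = error_rate_percent(0, attack_total)
```

With these figures, `genuine_total` matches the 300 genuine checks reported, and the iPhone error rate of one failure in 300 is roughly a third of a percent, consistent with "less than 1%".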

Liveness Specification

Our architecture supports various implementations of the Liveness algorithm: you can set the size of the video that will be processed and stored in your infrastructure:

- 1. Video from 1 to 5 seconds: size from 1 to 5 MB, processing time from 1 to 5 seconds.

Recommended:

For remote identification in the banking sector. In some cases, regulatory requirements prescribe recording the video file and storing it for an extended period, ranging from 1 to 10 years.

This option can also be used to establish business relationships in other sectors with remote identification requirements.

- 2. Video of 1 shot (duration up to 1 second): size from 300 KB to 1 MB.

Recommended:

For image enhancement and verification in the fintech sector and the sharing economy, where remote identification requirements are simplified.

For biometric authentication (confirmation of high-risk transactions, passwordless login, access recovery).

For cases where the speed of processing and transmission, and therefore the length of the client path, is of paramount importance and there are no regulatory requirements for file storage.

- Support for most modern mobile devices and web cameras
- Convenient SDK for embedding in Android / iOS / Web
- Duration of the check: from 1 to 3 seconds, at your choice
- ISO 30107-3 Biometric PAD Standard, iBeta Level 1 certified with 0% FAR
- ISO 30107-3 Biometric PAD Standard, iBeta Level 2 certified with 0% FAR
- Processing speed: 1 process per second
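The two video options in this specification can be expressed as a small configuration sketch. The Python below is illustrative only: `CaptureMode`, `choose_mode`, and `daily_storage_gb` are hypothetical names, not part of the product, and the check volume used in the example is just a sample figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptureMode:
    """One of the two capture options, with the size limits quoted in the text."""
    name: str
    max_duration_s: float
    min_size_mb: float
    max_size_mb: float


# Option 1: video of 1 to 5 seconds, 1 to 5 MB.
VIDEO = CaptureMode("video", max_duration_s=5.0, min_size_mb=1.0, max_size_mb=5.0)
# Option 2: a single shot of up to 1 second, 300 KB to 1 MB.
SINGLE_SHOT = CaptureMode("single_shot", max_duration_s=1.0, min_size_mb=0.3, max_size_mb=1.0)


def choose_mode(must_store_video):
    """Pick the video option when regulation requires storing a recording."""
    return VIDEO if must_store_video else SINGLE_SHOT


def daily_storage_gb(mode, checks_per_day):
    """Upper bound on storage per day, in GB (taking 1 GB = 1000 MB)."""
    return mode.max_size_mb * checks_per_day / 1000


# Sample volume for illustration: 200,000 checks per day.
video_gb = daily_storage_gb(VIDEO, 200_000)
shot_gb = daily_storage_gb(SINGLE_SHOT, 200_000)
```

At that sample volume the upper bounds differ by a factor of five, which is one reason the single-shot option suits scenarios without storage requirements.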

Our algorithm is resistant to attacks:

- Printed paper faces
- Device screens with video playback
- Substitution of images during the Liveness check
- Paper masks with cutouts
- Animated avatars and deepfakes
- Faces of sleeping people

Passive Liveness & Active Liveness

Active Liveness (cooperative) invites the user to take some action (move closer, wink, smile, turn the head).

The benefits of active Liveness:

In some cases, such as obtaining a loan remotely, concluding an agreement, or otherwise establishing a business relationship, the fact of an active action by the customer must be recorded (user consent expressed in the form of an active action).

An active action means that the user has read the user agreement and has done what was asked: for example, smiled or turned their head, thereby confirming that they are conscious and acting of their own free will.

Disadvantages of active Liveness:

Active Liveness requires a lengthy video (3 to 5 seconds) for the user to take the action. This lengthens the customer path because of the time needed to transmit and process the request.

Active Liveness reduces conversions by 5-10%. According to our statistics, from 5 to 10% of users either do not want to perform the action or cannot understand what is required of them (for example, at what moment to smile or blink).

Detecting the best shot according to the VISA or VISABORDER standard for subsequent face comparison is complicated, since the head is tilted or turned while the user expresses emotions (smile, wink).

Active Liveness does not add security or protection against spoofing attacks.

The wide availability of deepfake tools makes it possible to bypass any active Liveness check by animating a picture to perform the required action.

Therefore, modern approaches to countering spoofing attacks should not rely on active action. Active action should only be used to resolve legal disputes.

Passive Liveness

It requires no active action from the user other than looking at the camera.

The benefits of passive Liveness:

Passive Liveness does not require a lengthy video and can fit in 1 shot. This simplifies transmission and raises processing speed to as much as 1 process per second.

Passive Liveness does not decrease conversions because it requires no additional action.

Passive Liveness is as safe as active Liveness. Detecting the best frame according to the VISA or VISABORDER standard also works better on video that contains no emotions or head turns.

Disadvantages:

The lack of an active action can give rise to disputes. However, according to our statistics, such cases are unlikely.

Your Liveness interface

Create your Liveness check script interface with our customization libraries. Get inspired by scenario customization use cases.

Easy. Convenient. Unique.